The new Always Encrypted feature in SQL Server 2016 adds some overhead to the storage requirements of a column. I wanted to do some analysis to determine just how much overhead is incurred for various data types. This test was run against CTP 3.2.

Methodology

After creating a database along with a column master key and the column encryption key, I created four tables to store the encrypted data.

if exists (select * from sys.tables where name = 'VarcharAnalysis') drop table VarcharAnalysis; create table VarcharAnalysis ( CharCount int null, CharData varchar(8000) collate Latin1_General_BIN2 encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null ); create clustered index cl_VarcharAnalysis on VarcharAnalysis (CharCount); if exists (select * from sys.tables where name = 'NVarcharAnalysis') drop table NVarcharAnalysis; create table NVarcharAnalysis ( CharCount int null, CharData nvarchar(4000) collate Latin1_General_BIN2 encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null ); create clustered index cl_NVarcharAnalysis on NVarcharAnalysis (CharCount); if exists (select * from sys.tables where name = 'VarbinaryAnalysis') drop table VarbinaryAnalysis; create table VarbinaryAnalysis ( ByteCount int null, BinaryData varbinary(8000) encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null ); create clustered index cl_VarbinaryAnalysis on VarbinaryAnalysis (ByteCount); if exists (select * from sys.tables where name = 'FixedSizeAnalysis') drop table FixedSizeAnalysis; create table FixedSizeAnalysis ( TinyIntData tinyint encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null, SmallIntData smallint encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null, IntData int encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null, BigIntData bigint encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null, DecimalData decimal(38,0) encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null, BitData bit encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null, DateTimeData datetime encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null, DateTime2Data datetime2 encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null, GuidData uniqueidentifier encrypted with (column_encryption_key = [CEK_Auto1], encryption_type = Deterministic, algorithm = 'AEAD_AES_256_CBC_HMAC_SHA_256') null, ); |

I then created a small C# application to first insert data into the tables, and then to extract the encrypted values out of the tables.

using (Dal dal = new Dal(instanceName, databaseName, userName, password, true)) { dal.InsertVarcharAnalysis(null); for (int i = 0; i <= 8000; i++) { string data = new string('*', i); dal.InsertVarcharAnalysis(data); } } using (Dal dal = new Dal(instanceName, databaseName, userName, password, true)) { dal.InsertNVarcharAnalysis(null); for (int i = 0; i <= 4000; i++) { string data = new string('*', i); dal.InsertNVarcharAnalysis(data); } } using (Dal dal = new Dal(instanceName, databaseName, userName, password, true)) { dal.InsertVarbinaryAnalysis(null); byte[] template = new byte[8000]; Enumerable.Range(0, 8000).ToList().ForEach(i => template[i] = (byte)(i % 256)); for (int i = 0; i <= 8000; i++) { byte[] data = new byte[i]; Array.Copy(template, data, i); dal.InsertVarbinaryAnalysis(data); } } using (Dal dal = new Dal(instanceName, databaseName, userName, password, true)) { dal.InsertFixedSizeAnalysis(null, null, null, null, null, null, null, null, null); dal.InsertFixedSizeAnalysis(byte.MaxValue, short.MaxValue, int.MaxValue, long.MaxValue, decimal.MaxValue, true, DateTime.Now, DateTime.Now, Guid.NewGuid()); } |

In my Dal object’s constructor, the final parameter is a boolean value indicating whether to set the Column Encryption Setting to “Enabled”. This is necessary because the inserts must be done when encryption is enabled, but we need to read back the tables with encryption disabled so that we get back the raw, encrypted bytes instead of the decrypted data.

The analysis code looks like this:

using (Dal dal = new Dal(instanceName, databaseName, userName, password, false)) { List records = dal.ReadVarcharAnalysis(); int? previousRecordSize = int.MinValue; foreach (CharDataRecord record in records) { if (record.Data?.Length != previousRecordSize) { Console.WriteLine("{0} - {1}", record.Length, record.Data?.Length); previousRecordSize = record.Data?.Length; } } } using (Dal dal = new Dal(instanceName, databaseName, userName, password, false)) { List records = dal.ReadNVarcharAnalysis(); int? previousRecordSize = int.MinValue; foreach (CharDataRecord record in records) { if (record.Data?.Length != previousRecordSize) { Console.WriteLine("{0} - {1}", record.Length, record.Data?.Length); previousRecordSize = record.Data?.Length; } } } using (Dal dal = new Dal(instanceName, databaseName, userName, password, false)) { List records = dal.ReadVarbinaryAnalysis(); int? previousRecordSize = int.MinValue; foreach (CharDataRecord record in records) { if (record.Data?.Length != previousRecordSize) { Console.WriteLine("{0} - {1}", record.Length, record.Data?.Length); previousRecordSize = record.Data?.Length; } } } using (Dal dal = new Dal(instanceName, databaseName, userName, password, false)) { List records = dal.ReadFixedSizeAnalysis(); foreach (FixedSizeDataRecord record in records) { Console.WriteLine("TinyInt: {0}", record.TinyIntData?.Length); Console.WriteLine("SmallInt: {0}", record.SmallIntData?.Length); Console.WriteLine("Int: {0}", record.IntData?.Length); Console.WriteLine("BigInt: {0}", record.BigIntData?.Length); Console.WriteLine("Decimal: {0}", record.DecimalData?.Length); Console.WriteLine("Bit: {0}", record.BitData?.Length); Console.WriteLine("DateTime: {0}", record.DateTimeData?.Length); Console.WriteLine("DateTime2: {0}", record.DateTime2Data?.Length); Console.WriteLine("Guid: {0}", record.GuidData?.Length); } } |

Results

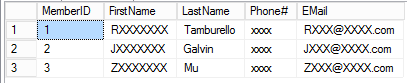

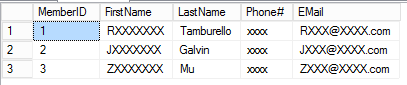

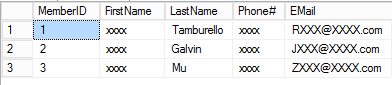

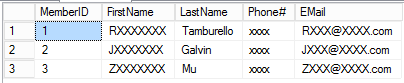

Let’s start by looking at the varchar data. Here are the first few rows in the table:

One thing to note is that, not surprisingly, a NULL input to the encryption function yields a NULL output This was true everywhere in this test. However, a blank string (0-length) generates 65 bytes of output, as does an input containing 1 character, 2 characters, etc. At 16 characters of input, the output grows to 81 bytes, and when input reaches 32 characters the output is 97 bytes.

| Input Size (characters) | Output Size (bytes) |

|---|---|

| 0 | 65 |

| 16 | 81 |

| 32 | 97 |

| 48 | 113 |

| 64 | 129 |

| 80 | 145 |

| 96 | 161 |

| 112 | 177 |

| 128 | 193 |

The pattern is quickly apparent. We can express this relationship very simply as:

C = 16 * floor(P / 16) + 65

where P is the input plaintext size in characters and C is the output cryptotext size in bytes.

Next, the nvarchar data. This has similar growth patterns but, not surprisingly, the encryption output grows at 8-character boundaries.

| Input Size (characters) | Output Size (bytes) |

|---|---|

| 0 | 65 |

| 8 | 81 |

| 16 | 97 |

| 24 | 113 |

| 32 | 129 |

| 40 | 145 |

| 48 | 161 |

| 56 | 177 |

| 64 | 193 |

| 72 | 209 |

C = 16 * floor(P / 8) + 65

This is the same result as before taking into account that each input character is now two bytes instead of one.

Varbinary data, as you might expect, follows the same pattern as varchar data:

| Input Size (characters) | Output Size (bytes) |

|---|---|

| 0 | 65 |

| 16 | 81 |

| 32 | 97 |

| 48 | 113 |

| 64 | 129 |

| 80 | 145 |

| 96 | 161 |

| 112 | 177 |

| 128 | 193 |

Finally, let’s look at fixed-width data. It turns out that the tinyint, smallint, int and bigint data types now occupy 65 bytes, as do bit, datetime and datetime2 columns. The decimal and uniqueidentifier data types require 81 bytes when they are encrypted. Again, this fits our pattern we have observed so far.

| Data Type | Unencrypted (bytes) | Encrypted (bytes) |

|---|---|---|

| tinyint | 1 | 65 |

| smallin | 2 | 65 |

| int | 4 | 65 |

| bigint | 8 | 65 |

| decimal(38,0) | 17 | 81 |

| bit | 0.125 | 65 |

| datetime | 8 | 65 |

| datetime2 | 8 | 65 |

| uniqueidentifier | 16 | 81 |

Conclusions

The size of the encrypted data throughout this analysis is very consistently

C = 16 * floor(P / 16) + 65

where P is the size, in bytes, of the unencrypted data, and C is the resulting size, again in bytes, of the encrypted data. This means that small inputs incur a significant amount of overhead, but as the input grows the overhead becomes only a tiny fraction. The greatest overhead is observed with the bit data type, where the encrypted data size is 520 times the unencrypted data size.

Coda

You may have noticed that I created all of the tables with the encryption type set to Deterministic. I re-ran this test using Randomized encryption throughout, and the result are identical.

Observe that these results are based on the encrypted data size after the initial insert into the table. I’m not sure if there is anything later in the process, such as key rotation, that may cause the data size to grow, so keep that limitation in mind.