We had an interesting situation at work recently where a SQL Agent went into what I would call a “zombie state” where the service was actually still alive and running but not really doing much. Scheduled jobs weren’t being executed. Well, mostly. It did appear that some jobs were going and others just sat there for several days without running. If a backup job doesn’t run, it’s a big deal.

I still don’t understand quite what happened, and likely never will since so much of SQL Agent is a black box and the various logs didn’t reveal a whole lot. Based on internet searches, this happens to folks from time to time, and rebooting the server is the general solution, which worked for us. But there doesn’t seem to be much out there about how to alert if the problem happens.

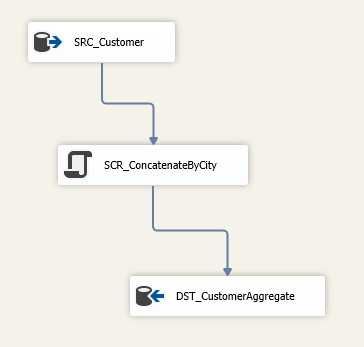

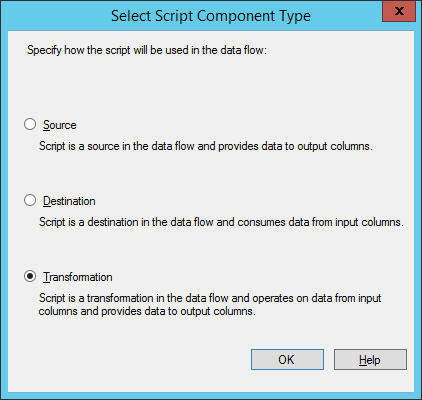

Such a monitor initially seemed like it would be a really simple thing. The more I dug in, however, it turned out to be considerably more involved than it first appeared, primarily for two reasons. The first is that the scheduling metadata is a bit complicated, and unraveling it is far more than just a simple query. Second, as I created the solution in SSIS, I came to realize that would need to write was basically amounted to a custom aggregate, something I’d not needed to do before and which was a learning process for me.

This is going to be a three-part post. I’ll address the first difficulty in this post, and the aggregation difficulty in the next one. Finally, I’ll pull it all together into the final monitoring package.

Scheduling Metadata

Job metadata is stored in msdb. There is a dbo.sysjobs table that stores one row per job. The dbo.sysschedules table stores the scheduling information for jobs, and dbo.sysjobschedules ties the other two together. All of these tables are well documented in BOL. There is also a sysjobhistory table to store the history, but we aren’t going need that. We are going to have use the undocumented xp_sqlagent_enum_jobs procedure to get some live job information.

For now, I’ll focus on sysschedules since that contains the meat of the scheduling metadata. The key bit of information here the freq_type column which indicates how frequently the schedule is activated. I am only concerned about recurring schedules, so the values I’m interested in are 4 (daily), 8 (weekly), 16 (monthly using days of the month) and 32 (monthly using weeks of the month). Some of the other columns in the table get interpreted in different ways depending on the value of freq_type. Most notably, the freq_interval columns gets used in varying ways for different frequency types.

Why Do We Even Care?

Why do we even need to parse through the scheduling metadata? It’s because SQL Server doesn’t have any built-in functionality (at least so far as I’ve been able to discover) to determine the next time a schedule should fire after an arbitrary date. There are ways, including with sp_help_job, to identify the next time a job should run, but this basically determines the next time the job should run from the current time. This doesn’t particularly help us.

For example, suppose a job last ran on January 1 and should fire every week. If today is January 20, SQL will tell us that the next run will be on January 22. Without knowing how to read through the schedule metadata, we don’t know that anything was missed. Knowing that the job runs every week, we can compute that the next time should be on January 8. Since this date is in the past, we know that some runs got skipped.

So the general idea for the monitor is this. We will identify all jobs that are enabled and that have at least one recurring schedule that is enabled. We will determine the last time that the job executed, and determine when it should run next. If the next run date is in the past, something happened, and we need to send an alert.

We will not consider any jobs are that currently executing. It is entirely possible to miss a scheduled run because the job is already active, and this monitor doesn’t care about that. However, it is a good idea to separately monitor for jobs that get stuck and run beyond what is normal.

Forecasting Schedules

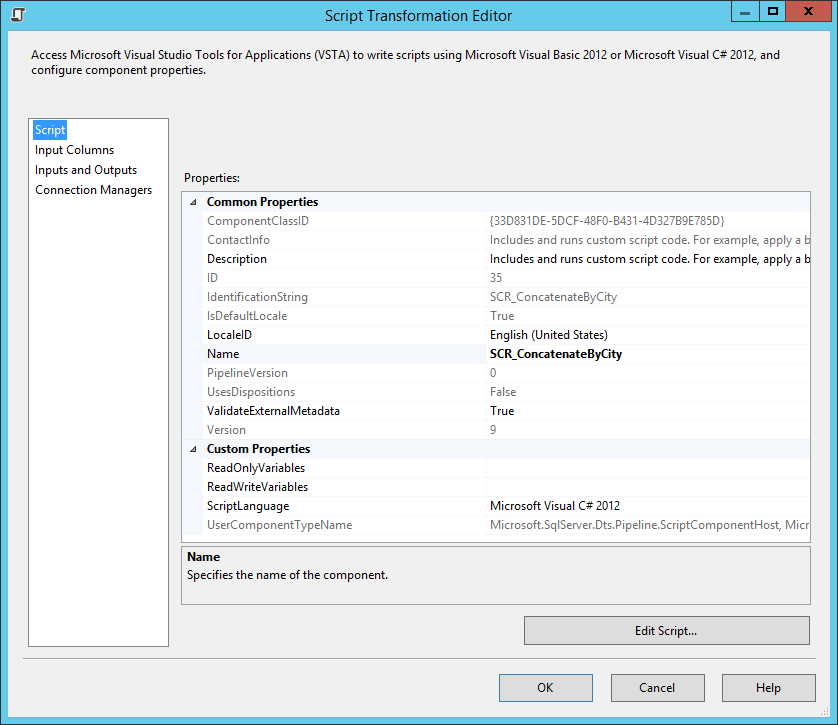

I initially started writing the forecasting logic in T-SQL, but it very quickly got ugly and I switched to C#. It got ugly anyway. But as it stands now, the solution is in C# code that I later integrated into a script task in SSIS.

For now, we will ignore the fact that a job can have multiple schedules, which we address in Part 2 of this article. The code that follows treats one schedule at a time. Later, we will combine any multiple schedules for the job together.

The code consists of a single static class called SqlAgentSchedule with a single public method called Forecast. The signature of Forecast basically takes the values of the relevant columns from sysschedules.

public static List<DateTime> Forecast(

DateTime startingFromDate,

int type,

int interval,

int subdayType,

int subdayInterval,

int relativeInterval,

int recurrenceFactor,

int? startDateInt,

int? endDateInt,

int? startTimeInt,

int? endTimeInt) |

public static List<DateTime> Forecast(

DateTime startingFromDate,

int type,

int interval,

int subdayType,

int subdayInterval,

int relativeInterval,

int recurrenceFactor,

int? startDateInt,

int? endDateInt,

int? startTimeInt,

int? endTimeInt)

The method returns a list of the next few times that the job should run. Specifically, it will return, at a minimum, any remaining times that the job should run today as well any times that the job should run on the next scheduled day. This is definitely overkill, but will guarantee that the returned list contains at least one item, except in the case where the job’s end date has passed.

After doing some simple computation to determine the start and end dates and times for the job, we call one of four different functions to compute the schedule based on the freq_type value. Finally, we filter out any run times that are prior to the specified start time.

List<DateTime> forecast = null;

switch (type)

{

case 4:

forecast = ForecastDailySchedule(

startingFromDate.Date,

interval,

subdayType,

subdayInterval,

startDate.Value,

endDate.Value);

break;

case 8:

forecast = ForecastWeeklySchedule(

startingFromDate.Date,

interval,

subdayType,

subdayInterval,

recurrenceFactor,

startDate.Value,

endDate.Value);

break;

case 16:

forecast = ForecastMonthlyByDaysSchedule(

startingFromDate.Date,

interval,

subdayType,

subdayInterval,

recurrenceFactor,

startDate.Value,

endDate.Value);

break;

case 32:

forecast = ForecastMonthlyByWeeksSchedule(

startingFromDate.Date,

interval,

subdayType,

subdayInterval,

relativeInterval,

recurrenceFactor,

startDate.Value,

endDate.Value);

break;

default:

return null;

}

forecast = forecast.Where(d => d > startingFromDate).ToList(); |

List<DateTime> forecast = null;

switch (type)

{

case 4:

forecast = ForecastDailySchedule(

startingFromDate.Date,

interval,

subdayType,

subdayInterval,

startDate.Value,

endDate.Value);

break;

case 8:

forecast = ForecastWeeklySchedule(

startingFromDate.Date,

interval,

subdayType,

subdayInterval,

recurrenceFactor,

startDate.Value,

endDate.Value);

break;

case 16:

forecast = ForecastMonthlyByDaysSchedule(

startingFromDate.Date,

interval,

subdayType,

subdayInterval,

recurrenceFactor,

startDate.Value,

endDate.Value);

break;

case 32:

forecast = ForecastMonthlyByWeeksSchedule(

startingFromDate.Date,

interval,

subdayType,

subdayInterval,

relativeInterval,

recurrenceFactor,

startDate.Value,

endDate.Value);

break;

default:

return null;

}

forecast = forecast.Where(d => d > startingFromDate).ToList();

The devil is in those schedule type-specific forecast functions. Disclaimer: I don’t by any means guarantee that the code is right, especially for some edge-case scenarios. I am also quite unsure about the exact logic that SQL uses for weekly and monthly schedules where the “relative interval” is greater than 1.

This code is also pretty raw; it could definitely stand a good refactoring. I just haven’t had the time to invest in that effort. That said, the code should be functional as-is.

General Approach to Forecasting Schedules

The idea here is that we first need to find the first day on or after the “starting from date” value sent into the function that meets the schedule criteria. Each of the schedule types have a parameter indicating how often the schedule recurs. For daily schedules, this is the freq_interval column; for weekly and monthly schedules it is the freq_recurrence_factor columns. I’ll call it the Recurrence Interval in the following discussion.

We first compute the number of days (or weeks, or months) that have elapsed since the Start Date for the schedule, and then take this number modulo the Recurrence Interval. If the modulo is non-zero, we add enough days, weeks or months to get to the next multiple of Recurrence Interval. For weekly and monthly schedules, this may result in a date that doesn’t meet some of the other qualifications, so we need to keep advancing the date until all of the criteria are satisfied.

Next, we compute the next date that the schedule should run, either in the current current Recurrence Interval or in the next one.

Schedules also have information about when the job should run within a day. This is indicated by the freq_subday_type column. A value of 1 indicates that the schedule does not recur within the day and should just run once. Other values indicate the number of time units that are represented by the freq_subday_interval column. A freq_subday_type of 2 indicates that the time units are seconds; 4 represents minutes; and 8 represents hours.

We also have the active_start_time and active_end_time columns that indicate when in the day the schedule starts and stops. These are integer values that indicate the times using the formula hour * 10000 + minute * 100 + second, so we have to reverse this formula to get the times in to usable formats. So then we begin at the start time each day and go to the end time at freq_subday_interval seconds/minutes/hours and add an entry to the forecast for each one.

A special case is when end time is less than the start time. This seems to mean that SQL will run the job into the following day until the end time, so we also have to account for that.

The code to handle the sub-day time logic is as follows.

TimeSpan subDayFrequency = GetSubdayFrequency(subdayType, subdayInterval);

TimeSpan startTime = startDate.TimeOfDay;

TimeSpan endTime = endDate.TimeOfDay;

if (endTime < startTime)

{

endTime = endTime.Add(_oneDay);

} |

TimeSpan subDayFrequency = GetSubdayFrequency(subdayType, subdayInterval);

TimeSpan startTime = startDate.TimeOfDay;

TimeSpan endTime = endDate.TimeOfDay;

if (endTime < startTime)

{

endTime = endTime.Add(_oneDay);

}

With this information we can loop through and find all times that meet the schedule criteria within the time span:

for (TimeSpan time = startTime; time <= endTime; time = time + subDayFrequency)

{

forecast.Add(nextRunDate + time);

} |

for (TimeSpan time = startTime; time <= endTime; time = time + subDayFrequency)

{

forecast.Add(nextRunDate + time);

}

GetSubdayFrequency is defined as follows. Note that in the case of a one-time schedule (sub-day type 1) we use a bit of hack and defines the recurrence as the number of seconds in a day. This guarantees that we will only get one hit in the current day.

private static TimeSpan GetSubdayFrequency(int subdayType, int subdayInterval)

{

int seconds = 0;

switch (subdayType)

{

case 1:

seconds = 24 * 60 * 60;

break;

case 2:

seconds = subdayInterval;

break;

case 4:

seconds = subdayInterval * 60;

break;

case 8:

seconds = subdayInterval * 60 * 60;

break;

default:

throw new ApplicationException("invalid subdayType.");

}

return new TimeSpan(0, 0, seconds);

} |

private static TimeSpan GetSubdayFrequency(int subdayType, int subdayInterval)

{

int seconds = 0;

switch (subdayType)

{

case 1:

seconds = 24 * 60 * 60;

break;

case 2:

seconds = subdayInterval;

break;

case 4:

seconds = subdayInterval * 60;

break;

case 8:

seconds = subdayInterval * 60 * 60;

break;

default:

throw new ApplicationException("invalid subdayType.");

}

return new TimeSpan(0, 0, seconds);

}

Daily Schedules

The daily schedule is by far the simplest to implement. Since the job simply runs every x days, really all we need to do is to determine the next day on a multiple of x from the schedule start date.

int daysElapsed = (int)((startingFromDate - startDate.Date).TotalDays);

int daysInCurrentInterval = daysElapsed % interval;

int daysUntilNextRun = daysInCurrentInterval == 0 ? 0 : interval - daysInCurrentInterval;

DateTime nextRunDate = startingFromDate.AddDays(daysUntilNextRun); |

int daysElapsed = (int)((startingFromDate - startDate.Date).TotalDays);

int daysInCurrentInterval = daysElapsed % interval;

int daysUntilNextRun = daysInCurrentInterval == 0 ? 0 : interval - daysInCurrentInterval;

DateTime nextRunDate = startingFromDate.AddDays(daysUntilNextRun);

Weekly Schedules

With a weekly schedule, things start off pretty much the same as for daily. If a schedules fires every x weeks, it’s really the same thing as saying the schedule files every 7x days, so the logic is pretty much the same as before. Remember, we now need to use the freq_recurrence_factor column instead of the freq_interval column.

int daysElapsed = (int)((startingFromDate - startDate.Date).TotalDays);

int daysInCurrentInterval = daysElapsed % (recurrenceFactor * 7);

int daysUntilNextRun = daysInCurrentInterval == 0 ? 0 : (recurrenceFactor * 7) - daysInCurrentInterval;

DateTime nextRunDate = startingFromDate.AddDays(daysUntilNextRun); |

int daysElapsed = (int)((startingFromDate - startDate.Date).TotalDays);

int daysInCurrentInterval = daysElapsed % (recurrenceFactor * 7);

int daysUntilNextRun = daysInCurrentInterval == 0 ? 0 : (recurrenceFactor * 7) - daysInCurrentInterval;

DateTime nextRunDate = startingFromDate.AddDays(daysUntilNextRun);

But recall that we can specify which days of the week that job runs, so we have to find the next day within the week that qualifies. The freq_interval column is used to identify the days of the week that are selected as a bitmap. I have a C# flag-based enum called SqlDayOfWeek that directly maps to the bitmap.

SqlDayOfWeek dayOfWeek = (SqlDayOfWeek)interval;

nextRunDate = AdjustToNextMatchingDayOfWeekInValidWeek(nextRunDate, dayOfWeek, startDate, recurrenceFactor); |

SqlDayOfWeek dayOfWeek = (SqlDayOfWeek)interval;

nextRunDate = AdjustToNextMatchingDayOfWeekInValidWeek(nextRunDate, dayOfWeek, startDate, recurrenceFactor);

The function that is called basically just the checks the date passed in to see if it matches both the scheduled days as well as the recurrence interval. If not, we advance to the next day and keep on rechecking. It’s a brute force approach.

private static DateTime AdjustToNextMatchingDayOfWeekInValidWeek(DateTime currentDate, SqlDayOfWeek sqlDayOfWeek, DateTime startDate, int recurrenceFactor)

{

bool isDone = false;

while (isDone == false)

{

currentDate = AdjustToNextMatchingDayOfWeek(currentDate, sqlDayOfWeek);

int weeksElapsed = (int)((currentDate - startDate.Date).TotalDays) / 7;

if ((weeksElapsed % recurrenceFactor) == 0)

{

isDone = true;

}

else

{

currentDate = currentDate.AddDays(1);

}

}

return currentDate;

}

private static DateTime AdjustToNextMatchingDayOfWeek(DateTime currentDate, SqlDayOfWeek sqlDayOfWeek)

{

while (IsDayOfWeekMatch(currentDate.DayOfWeek, sqlDayOfWeek) == false)

{

currentDate = currentDate.AddDays(1);

}

return currentDate;

}

private static bool IsDayOfWeekMatch(DayOfWeek dow, SqlDayOfWeek sql)

{

return (dow == DayOfWeek.Sunday && sql.HasFlag(SqlDayOfWeek.Sunday)) ||

(dow == DayOfWeek.Monday && sql.HasFlag(SqlDayOfWeek.Monday)) ||

(dow == DayOfWeek.Tuesday && sql.HasFlag(SqlDayOfWeek.Tuesday)) ||

(dow == DayOfWeek.Wednesday && sql.HasFlag(SqlDayOfWeek.Wednesday)) ||

(dow == DayOfWeek.Thursday && sql.HasFlag(SqlDayOfWeek.Thursday)) ||

(dow == DayOfWeek.Friday && sql.HasFlag(SqlDayOfWeek.Friday)) ||

(dow == DayOfWeek.Saturday && sql.HasFlag(SqlDayOfWeek.Saturday));

} |

private static DateTime AdjustToNextMatchingDayOfWeekInValidWeek(DateTime currentDate, SqlDayOfWeek sqlDayOfWeek, DateTime startDate, int recurrenceFactor)

{

bool isDone = false;

while (isDone == false)

{

currentDate = AdjustToNextMatchingDayOfWeek(currentDate, sqlDayOfWeek);

int weeksElapsed = (int)((currentDate - startDate.Date).TotalDays) / 7;

if ((weeksElapsed % recurrenceFactor) == 0)

{

isDone = true;

}

else

{

currentDate = currentDate.AddDays(1);

}

}

return currentDate;

}

private static DateTime AdjustToNextMatchingDayOfWeek(DateTime currentDate, SqlDayOfWeek sqlDayOfWeek)

{

while (IsDayOfWeekMatch(currentDate.DayOfWeek, sqlDayOfWeek) == false)

{

currentDate = currentDate.AddDays(1);

}

return currentDate;

}

private static bool IsDayOfWeekMatch(DayOfWeek dow, SqlDayOfWeek sql)

{

return (dow == DayOfWeek.Sunday && sql.HasFlag(SqlDayOfWeek.Sunday)) ||

(dow == DayOfWeek.Monday && sql.HasFlag(SqlDayOfWeek.Monday)) ||

(dow == DayOfWeek.Tuesday && sql.HasFlag(SqlDayOfWeek.Tuesday)) ||

(dow == DayOfWeek.Wednesday && sql.HasFlag(SqlDayOfWeek.Wednesday)) ||

(dow == DayOfWeek.Thursday && sql.HasFlag(SqlDayOfWeek.Thursday)) ||

(dow == DayOfWeek.Friday && sql.HasFlag(SqlDayOfWeek.Friday)) ||

(dow == DayOfWeek.Saturday && sql.HasFlag(SqlDayOfWeek.Saturday));

}

Monthly Schedules (Day of Month)

We follow the same basic principle here, with the slight complication of having to deal with rollover into a new year. There is an interesting twist here. Suppose that I schedule a job to fire on the 31st of every month, starting in January, and recurring every three months. The next time the job should run is then “April 31,” a non-existent date. SQL Server will simply skip this invalid date, and next run on July 31, so the code has to handle this odd case.

Monthly Schedules (Week of Month)

This type of schedule takes a little bit more effort to interpret. I define another enumeration called SqlWeek that wraps the relativeInterval parameter, and then a couple of helper methods to determine the days of the week the job should run and to compute the next run date.

SqlDayOfWeek dayOfWeek = DayOfWeekFromRelativeMonthDay(interval);

SqlWeek week = (SqlWeek)relativeInterval;

DateTime nextRunDate = GetRelativeDayInMonth(nextRunYear, nextRunMonth, week, dayOfWeek); |

SqlDayOfWeek dayOfWeek = DayOfWeekFromRelativeMonthDay(interval);

SqlWeek week = (SqlWeek)relativeInterval;

DateTime nextRunDate = GetRelativeDayInMonth(nextRunYear, nextRunMonth, week, dayOfWeek);

The helper methods are as follows.

private static SqlDayOfWeek DayOfWeekFromRelativeMonthDay(int interval)

{

switch (interval)

{

case 1:

return SqlDayOfWeek.Sunday;

case 2:

return SqlDayOfWeek.Monday;

case 3:

return SqlDayOfWeek.Tuesday;

case 4:

return SqlDayOfWeek.Wednesday;

case 5:

return SqlDayOfWeek.Thursday;

case 6:

return SqlDayOfWeek.Friday;

case 7:

return SqlDayOfWeek.Saturday;

case 8:

return SqlDayOfWeek.Day;

case 9:

return SqlDayOfWeek.Weekday;

case 10:

return SqlDayOfWeek.WeekendDay;

}

}

private static DateTime GetRelativeDayInMonth(int year, int month, SqlWeek week, SqlDayOfWeek dayOfWeek)

{

int lowerDayInclusive;

int upperDayInclusive;

int lastDayInMonth = DateTime.DaysInMonth(year, month);

switch (week)

{

case SqlWeek.First:

lowerDayInclusive = 1;

upperDayInclusive = 7;

break;

case SqlWeek.Second:

lowerDayInclusive = 8;

upperDayInclusive = 14;

break;

case SqlWeek.Third:

lowerDayInclusive = 15;

upperDayInclusive = 21;

break;

case SqlWeek.Fourth:

lowerDayInclusive = 22;

upperDayInclusive = 28;

break;

case SqlWeek.Last:

lowerDayInclusive = lastDayInMonth - 6;

upperDayInclusive = lastDayInMonth;

break;

default:

throw new ApplicationException("Invalid week.");

}

for (int day = lowerDayInclusive; day <= upperDayInclusive; day++)

{

DateTime date = new DateTime(year, month, day);

if (IsDayOfWeekMatch(date.DayOfWeek, dayOfWeek))

{

return date;

}

}

} |

private static SqlDayOfWeek DayOfWeekFromRelativeMonthDay(int interval)

{

switch (interval)

{

case 1:

return SqlDayOfWeek.Sunday;

case 2:

return SqlDayOfWeek.Monday;

case 3:

return SqlDayOfWeek.Tuesday;

case 4:

return SqlDayOfWeek.Wednesday;

case 5:

return SqlDayOfWeek.Thursday;

case 6:

return SqlDayOfWeek.Friday;

case 7:

return SqlDayOfWeek.Saturday;

case 8:

return SqlDayOfWeek.Day;

case 9:

return SqlDayOfWeek.Weekday;

case 10:

return SqlDayOfWeek.WeekendDay;

}

}

private static DateTime GetRelativeDayInMonth(int year, int month, SqlWeek week, SqlDayOfWeek dayOfWeek)

{

int lowerDayInclusive;

int upperDayInclusive;

int lastDayInMonth = DateTime.DaysInMonth(year, month);

switch (week)

{

case SqlWeek.First:

lowerDayInclusive = 1;

upperDayInclusive = 7;

break;

case SqlWeek.Second:

lowerDayInclusive = 8;

upperDayInclusive = 14;

break;

case SqlWeek.Third:

lowerDayInclusive = 15;

upperDayInclusive = 21;

break;

case SqlWeek.Fourth:

lowerDayInclusive = 22;

upperDayInclusive = 28;

break;

case SqlWeek.Last:

lowerDayInclusive = lastDayInMonth - 6;

upperDayInclusive = lastDayInMonth;

break;

default:

throw new ApplicationException("Invalid week.");

}

for (int day = lowerDayInclusive; day <= upperDayInclusive; day++)

{

DateTime date = new DateTime(year, month, day);

if (IsDayOfWeekMatch(date.DayOfWeek, dayOfWeek))

{

return date;

}

}

}

Summary

Well, that was an awful lot of work just get the next run time following an arbitrary date, and this was definitely far more work than I expected.

In Part 2 of this post, I’ll show how I built a custom aggregate in SSIS. In Part 3, I’ll wrap the C# code into an SSIS package and pull everything together.